In February the WSJ graphics team put together a series of interactive visualisations on the impact of vaccination that blew up on twitter and facebook, and were roundly lauded as great-looking and effective dataviz. Some of these had enough data available to look particularly good, such as for the measles vaccine:

How hard would it be to recreate an R version?

Base R version

Quite recently Mick Watson, a computational biologist based here in Edinburgh, put together a base R version of this figure using heatmap.2 from the gplots package.

If you’re interested in the code for this, I suggest you check out his blog post where he walks the reader through creating the figure, beginning from heatmap defaults.

However, it didn’t take long for someone to pipe up asking for a ggplot2 version (3 minutes in fact…) and that’s my preference too, so I decided to have a go at putting one together.

ggplot2 version

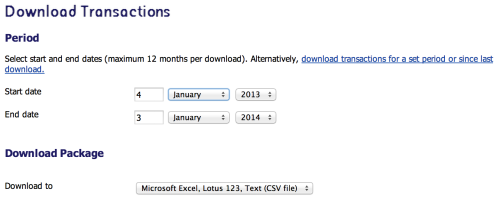

Thankfully the hard work of tracking down the data had already been done for me, to get at it follow these steps:

- Register and login to “Project Tycho“

- Go to level 1 data, then Search and retrieve data

- Now change a couple of options: geographic level := state; disease outcome := incidence

- Add all states (highlight all at once with Ctrl+A (or Cmd+A on Macs)

- Hit submit and scroll down to Click here to download results to excel

- Open in excel and export to CSV

Simple right!

Now all that’s left to do is a bit of tidying. The data comes in wide format, so can be melted to our ggplot2-friendly long format with:

measles <- melt(measles, id.var=c("YEAR", "WEEK"))

After that we can clean up the column names and use dplyr to aggregate weekly incidence rates into an annual measure:

colnames(measles) <- c("year", "week", "state", "cases")

mdf <- measles %>% group_by(state, year) %>%

summarise(c=if(all(is.na(cases))) NA else

sum(cases, na.rm=T))

mdf$state <- factor(mdf$state, levels=rev(levels(mdf$state)))

It’s a bit crude but what I’m doing is summing the weekly incidence rates and leaving NAs if there’s no data for a whole year. This seems to match what’s been done in the WSJ article, though a more intepretable method could be something like average weekly incidence, as used by Robert Allison in his SAS version.

After trying to match colours via the OS X utility “digital colour meter” without much success, I instead grabbed the colours and breaks from the original plot’s javascript to make them as close as possible.

In full, the actual ggplot2 command took a fair bit of tweaking:

ggplot(mdf, aes(y=state, x=year, fill=c)) +

geom_tile(colour="white", linewidth=2,

width=.9, height=.9) + theme_minimal() +

scale_fill_gradientn(colours=cols, limits=c(0, 4000),

breaks=seq(0, 4e3, by=1e3),

na.value=rgb(246, 246, 246, max=255),

labels=c("0k", "1k", "2k", "3k", "4k"),

guide=guide_colourbar(ticks=T, nbin=50,

barheight=.5, label=T,

barwidth=10)) +

scale_x_continuous(expand=c(0,0),

breaks=seq(1930, 2010, by=10)) +

geom_segment(x=1963, xend=1963, y=0, yend=51.5, size=.9) +

labs(x="", y="", fill="") +

ggtitle("Measles") +

theme(legend.position=c(.5, -.13),

legend.direction="horizontal",

legend.text=element_text(colour="grey20"),

plot.margin=grid::unit(c(.5,.5,1.5,.5), "cm"),

axis.text.y=element_text(size=6, family="Helvetica",

hjust=1),

axis.text.x=element_text(size=8),

axis.ticks.y=element_blank(),

panel.grid=element_blank(),

title=element_text(hjust=-.07, face="bold", vjust=1,

family="Helvetica"),

text=element_text(family="URWHelvetica")) +

annotate("text", label="Vaccine introduced", x=1963, y=53,

vjust=1, hjust=0, size=I(3), family="Helvetica")

Result

I’m pretty happy with the outcome but there are a few differences: the ordering is out (someone pointed out the original is ordered by two letter code rather than full state name) and the fonts are off (as far as I can tell they use “Whitney ScreenSmart” among others).

I’m pretty happy with the outcome but there are a few differences: the ordering is out (someone pointed out the original is ordered by two letter code rather than full state name) and the fonts are off (as far as I can tell they use “Whitney ScreenSmart” among others).

Obviously the original is an interactive chart which works great with this data. It turns out it was built with the highcharts library, which actually has R bindings via the rCharts package, so in theory the original chart could be entirely recreated in R! However, for now at least, that’ll be left as an exercise for the reader…